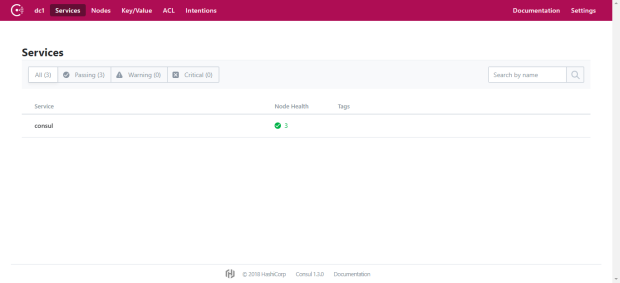

Playground: https://demo.consul.io/ui/dc1/services

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:7e:d8:13 brd ff:ff:ff:ff:ff:ff

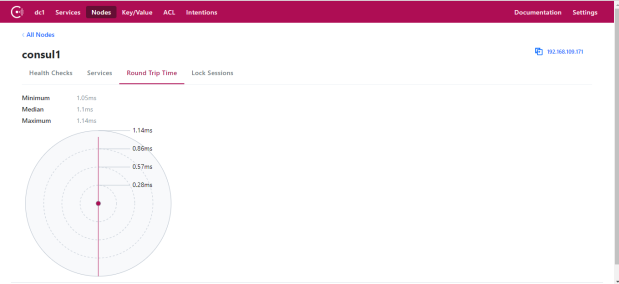

inet 192.168.109.171/24

[root@localhost ~]# hostnamectl set-hostname consul1.example.com

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:c1:70:53 brd ff:ff:ff:ff:ff:ff

inet 192.168.109.172/24

[root@localhost ~]# hostnamectl set-hostname consul2.example.com

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:6b:5a:8a brd ff:ff:ff:ff:ff:ff

inet 192.168.109.173/24

[root@localhost ~]# hostnamectl set-hostname consul3.example.com

[root@consul1 ~]# vi /etc/hosts

192.168.109.171 consul1.example.com

192.168.109.172 consul2.example.com

192.168.109.173 consul3.example.com

[root@consul2 ~]# vi /etc/hosts

192.168.109.171 consul1.example.com

192.168.109.172 consul2.example.com

192.168.109.173 consul3.example.com

[root@consul3 ~]# vi /etc/hosts

192.168.109.171 consul1.example.com

192.168.109.172 consul2.example.com

192.168.109.173 consul3.example.com
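Editing /etc/hosts by hand on three machines invites typos. As a minimal sketch, the same three entries can be appended idempotently by a shell function run as root on each node. The function name add_consul_hosts is hypothetical. Its optional argument (a file other than /etc/hosts) exists only so it can be tried on a scratch file first.

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any line that is already present, so repeated runs are harmless.
add_consul_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in \
    "192.168.109.171 consul1.example.com" \
    "192.168.109.172 consul2.example.com" \
    "192.168.109.173 consul3.example.com"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" 2>/dev/null \
      || printf '%s\n' "$entry" >> "$hosts_file"
  done
}

# Usage (as root on each node): add_consul_hosts
```

Running it twice leaves exactly three entries, so it is safe to re-run after a partial edit.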

Choose the Consul version you require:

https://releases.hashicorp.com/consul/

Install on all 3 nodes:

[root@consul1 ~]# yum install vim telnet net-tools bind unzip wget -y

[root@consul1 ~]# unzip consul_1.3.0_linux_amd64.zip

Archive: consul_1.3.0_linux_amd64.zip

inflating: consul

[root@consul1 ~]# mv consul /usr/local/bin/

[root@consul1 ~]# consul version

Consul v1.3.0

Protocol 2 spoken by default, understands 2 to 3 (agent will automatically use protocol >2 when speaking to compatible agents)
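The transcript starts with the zip already present in /root. The download itself is not shown. Here is a sketch of that missing step, combined with the unzip and mv commands above so it can be repeated on consul2 and consul3. It assumes the linux_amd64 build and the releases.hashicorp.com/consul/VERSION/ directory layout. Both function names are hypothetical.

```shell
# Hypothetical helper: release URL for a given version (linux_amd64 build assumed).
consul_zip_url() {
  printf 'https://releases.hashicorp.com/consul/%s/consul_%s_linux_amd64.zip\n' "$1" "$1"
}

# Hypothetical helper: download, unpack and install one version, as root.
# Mirrors the unzip / mv / consul version steps shown above.
install_consul() {
  local version="$1"
  local zip="consul_${version}_linux_amd64.zip"
  wget -q -O "$zip" "$(consul_zip_url "$version")" || return 1
  unzip -o "$zip" consul || return 1   # -o: overwrite an existing ./consul
  mv consul /usr/local/bin/ && consul version
}

# Usage: install_consul 1.3.0
```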

[root@consul1 ~]# useradd -m consul

[root@consul1 ~]# passwd consul

Changing password for user consul.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

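usermod -aG sudo consul (Debian/Ubuntu, where the administrators' group is sudo)

or, on CentOS/RHEL (as on these nodes), where it is wheel:

[root@consul1 ~]# usermod -aG wheel consul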

[root@consul1 ~]# mkdir -p /etc/consul.d/server

[root@consul1 ~]# mkdir /var/consul

[root@consul1 ~]# chown consul: /var/consul

[root@consul1 ~]# consul keygen

foAFxW3BzNxCda3f8aDDxg==
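All three servers must share this key. It can be sanity-checked before it goes into each config.json. This is a minimal sketch, assuming GNU base64. It accepts keys that decode to 16, 24 or 32 bytes, which are the AES-128/192/256 key sizes the underlying gossip library (memberlist) accepts. The key above is 24 base64 characters, i.e. 16 bytes. valid_gossip_key is a hypothetical name.

```shell
# Hypothetical helper: succeed only if the base64 key decodes to 16, 24 or 32
# bytes (AES-128/192/256). Assumes GNU coreutils base64 (-d to decode).
valid_gossip_key() {
  local nbytes
  nbytes=$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c)
  case "$((nbytes))" in
    16|24|32) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage: valid_gossip_key "foAFxW3BzNxCda3f8aDDxg==" && echo "key OK"
```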

[root@consul1 ~]# vim /etc/consul.d/server/config.json

{

"bind_addr": "192.168.109.171",

"datacenter": "dc1",

"data_dir": "/var/consul",

"encrypt": "foAFxW3BzNxCda3f8aDDxg==",

"log_level": "INFO",

"enable_syslog": true,

"enable_debug": true,

"node_name": "consul1",

"server": true,

"bootstrap_expect": 3,

"leave_on_terminate": false,

"skip_leave_on_interrupt": true,

"rejoin_after_leave": true,

"retry_join": [

"192.168.109.171:8301",

"192.168.109.172:8301",

"192.168.109.173:8301"

]

}
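The three config.json files differ only in bind_addr and node_name. Copying them by hand makes slips such as a duplicated retry_join address easy. The sketch below renders the file from one template instead. write_server_config is hypothetical, and the key is the one generated above. The optional third argument (an output directory) exists so the result can be inspected before touching /etc/consul.d.

```shell
# Hypothetical helper: render config.json for one server from a single template,
# so only node_name and bind_addr vary between the three nodes.
# Usage: write_server_config NODE_NAME BIND_ADDR [OUTPUT_DIR]
write_server_config() {
  local node="$1" addr="$2" dir="${3:-/etc/consul.d/server}"
  local key="foAFxW3BzNxCda3f8aDDxg=="   # output of consul keygen above
  mkdir -p "$dir"
  cat > "$dir/config.json" <<EOF
{
  "bind_addr": "$addr",
  "datacenter": "dc1",
  "data_dir": "/var/consul",
  "encrypt": "$key",
  "log_level": "INFO",
  "enable_syslog": true,
  "enable_debug": true,
  "node_name": "$node",
  "server": true,
  "bootstrap_expect": 3,
  "leave_on_terminate": false,
  "skip_leave_on_interrupt": true,
  "rejoin_after_leave": true,
  "retry_join": [
    "192.168.109.171:8301",
    "192.168.109.172:8301",
    "192.168.109.173:8301"
  ]
}
EOF
}
```

On each node, call it with that node's values, e.g. write_server_config consul2 192.168.109.172 on consul2.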

[root@consul2 ~]# useradd -m consul && passwd consul && usermod -aG wheel consul && mkdir -p /etc/consul.d/server && mkdir /var/consul && chown consul: /var/consul

[root@consul2 ~]# cat /etc/consul.d/server/config.json

{

"bind_addr": "192.168.109.172",

"datacenter": "dc1",

"data_dir": "/var/consul",

"encrypt": "foAFxW3BzNxCda3f8aDDxg==",

"log_level": "INFO",

"enable_syslog": true,

"enable_debug": true,

"node_name": "consul2",

"server": true,

"bootstrap_expect": 3,

"leave_on_terminate": false,

"skip_leave_on_interrupt": true,

"rejoin_after_leave": true,

"retry_join": [

"192.168.109.171:8301",

"192.168.109.172:8301",

"192.168.109.173:8301"

]

}

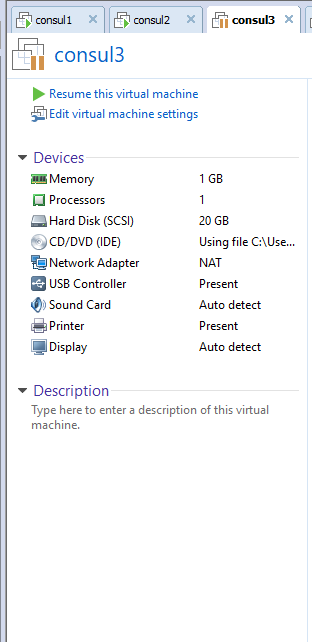

[root@consul3 ~]# useradd -m consul && passwd consul && usermod -aG wheel consul && mkdir -p /etc/consul.d/server && mkdir /var/consul && chown consul: /var/consul

[root@consul3 ~]# cat /etc/consul.d/server/config.json

{

"bind_addr": "192.168.109.173",

"datacenter": "dc1",

"data_dir": "/var/consul",

"encrypt": "foAFxW3BzNxCda3f8aDDxg==",

"log_level": "INFO",

"enable_syslog": true,

"enable_debug": true,

"node_name": "consul3",

"server": true,

"bootstrap_expect": 3,

"leave_on_terminate": false,

"skip_leave_on_interrupt": true,

"rejoin_after_leave": true,

"retry_join": [

"192.168.109.171:8301",

"192.168.109.172:8301",

"192.168.109.173:8301"

]

}

[root@consul1 ~]# systemctl stop firewalld

[root@consul1 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
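Disabling firewalld is the quickest way to let the servers talk, but it also removes all other filtering. An alternative sketch keeps firewalld running and opens only the ports the servers use among themselves:

- 8300/tcp for server RPC, which also carries the Raft traffic.
- 8301 and 8302 over both TCP and UDP for Serf LAN and WAN gossip.

HTTP (8500) and DNS (8600) stay closed here because the agents listen on them only at 127.0.0.1 (Client Addr in the startup output). The function only prints the commands so they can be reviewed before being applied. Its name is hypothetical.

```shell
# Hypothetical helper: print (not run) the firewall-cmd calls that open the
# server-to-server Consul ports, so the list can be reviewed before applying.
consul_firewall_cmds() {
  local port
  # 8300/tcp: server RPC (Raft replication between servers uses this port)
  echo "firewall-cmd --permanent --add-port=8300/tcp"
  # 8301 = Serf LAN, 8302 = Serf WAN; gossip uses both TCP and UDP
  for port in 8301 8302; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
    echo "firewall-cmd --permanent --add-port=${port}/udp"
  done
  # Make the permanent rules active in the running firewall
  echo "firewall-cmd --reload"
}

# Usage (as root on each node): consul_firewall_cmds | sh
```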

[root@consul1 ~]# su consul

[consul@consul1 root]$

[consul@consul1 root]$ consul agent -config-dir /etc/consul.d/server/ -ui

bootstrap_expect > 0: expecting 3 servers

==> Starting Consul agent...

==> Consul agent running!

Version: 'v1.3.0'

Node ID: 'ff3a4ce5-0505-a9ef-d99a-718b1514ad8b'

Node name: 'ConsulServer1'

Datacenter: 'dc1' (Segment: '<all>')

Server: true (Bootstrap: false)

Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 8600)

Cluster Addr: 192.168.109.171 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: true, TLS-Outgoing: false, TLS-Incoming: false

[root@consul2 ~]# su consul

[consul@consul2 root]$ consul agent -config-dir /etc/consul.d/server/

[root@consul3 ~]# su consul

[consul@consul3 root]$ consul agent -config-dir /etc/consul.d/server/

bootstrap_expect > 0: expecting 3 servers

==> Starting Consul agent...

==> Consul agent running!

Version: 'v1.3.0'

Node ID: 'd8c0d164-7ff0-f45f-bcf3-beea2e98e239'

Node name: 'ConsulServer1'

Datacenter: 'dc1' (Segment: '<all>')

Server: true (Bootstrap: false)

Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 8600)

Cluster Addr: 192.168.109.173 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: true, TLS-Outgoing: false, TLS-Incoming: false

Later restart of the agent on consul3. The first run already stored the gossip keyring under /var/consul/serf, so the agent now warns that it uses the stored keyring rather than the encrypt setting:

[root@consul3 ~]# su consul

[consul@consul3 root]$ consul agent -config-dir /etc/consul.d/server/

WARNING: LAN keyring exists but -encrypt given, using keyring

WARNING: WAN keyring exists but -encrypt given, using keyring

bootstrap_expect > 0: expecting 3 servers

==> Starting Consul agent...

==> Consul agent running!

Version: 'v1.3.0'

Node ID: 'd8c0d164-7ff0-f45f-bcf3-beea2e98e239'

Node name: 'consul3'

Datacenter: 'dc1' (Segment: '<all>')

Server: true (Bootstrap: false)

Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, gRPC: -1, DNS: 8600)

Cluster Addr: 192.168.109.173 (LAN: 8301, WAN: 8302)

Encrypt: Gossip: true, TLS-Outgoing: false, TLS-Incoming: false
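Each agent above runs in the foreground of a su consul shell and stops when that shell exits. A common alternative is a systemd unit. Below is a minimal sketch using the paths from this setup (binary in /usr/local/bin, config in /etc/consul.d/server/). The unit contents and the helper name are assumptions, not part of the original walkthrough. On consul1, append -ui to ExecStart to match the command used there.

```shell
# Hypothetical helper: write a systemd unit that runs the server agent as the
# consul user. ExecReload sends SIGHUP, which makes Consul reload its config.
write_consul_unit() {
  local dest="${1:-/etc/systemd/system}"
  cat > "$dest/consul.service" <<'EOF'
[Unit]
Description=Consul server agent
Wants=network-online.target
After=network-online.target

[Service]
User=consul
Group=consul
ExecStart=/usr/local/bin/consul agent -config-dir=/etc/consul.d/server/
ExecReload=/bin/kill -HUP $MAINPID
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Usage (as root):
#   write_consul_unit && systemctl daemon-reload
#   systemctl enable consul && systemctl start consul
```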

[root@consul1 ~]# netstat -tlpn

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 192.168.109.171:8300 0.0.0.0:* LISTEN 1310/consul

tcp 0 0 192.168.109.171:8301 0.0.0.0:* LISTEN 1310/consul

tcp 0 0 192.168.109.171:8302 0.0.0.0:* LISTEN 1310/consul

tcp 0 0 127.0.0.1:8500 0.0.0.0:* LISTEN 1310/consul

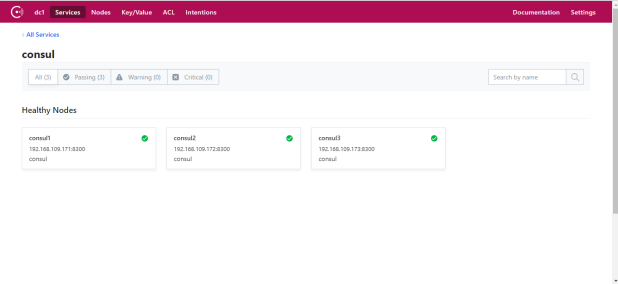

[root@consul1 ~]# consul members

Node Address Status Type Build Protocol DC Segment

consul1 192.168.109.171:8301 alive server 1.3.0 2 dc1 <all>

consul2 192.168.109.172:8301 alive server 1.3.0 2 dc1 <all>

consul3 192.168.109.173:8301 alive server 1.3.0 2 dc1 <all>
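For scripted health checks, the Status column of consul members can be counted. This is a minimal sketch with a hypothetical count_alive filter. It assumes the column layout shown above, where the first line is the header and Status is the third column.

```shell
# Hypothetical filter: read consul members output on stdin and print how many
# members have Status "alive" (column 3). The header line is skipped.
count_alive() {
  awk 'NR > 1 && $3 == "alive" { n++ } END { print n + 0 }'
}

# Usage: consul members | count_alive
# For the output above this prints 3.
```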

[root@consul1 ~]# consul monitor

2018/11/02 18:32:41 [INFO] raft: Initial configuration (index=0): []

2018/11/02 18:32:41 [INFO] serf: EventMemberJoin: consul1.dc1 192.168.109.171

2018/11/02 18:32:41 [INFO] serf: EventMemberJoin: consul1 192.168.109.171

2018/11/02 18:32:41 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp)

2018/11/02 18:32:41 [INFO] raft: Node at 192.168.109.171:8300 [Follower] entering Follower state (Leader: "")

2018/11/02 18:32:41 [INFO] serf: Attempting re-join to previously known node: ConsulServer1.dc1: 192.168.109.171:8302

2018/11/02 18:32:41 [INFO] serf: Attempting re-join to previously known node: ConsulServer1: 192.168.109.171:8301

2018/11/02 18:32:41 [INFO] consul: Adding LAN server consul1 (Addr: tcp/192.168.109.171:8300) (DC: dc1)

2018/11/02 18:32:41 [INFO] consul: Handled member-join event for server "consul1.dc1" in area "wan"

2018/11/02 18:32:41 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp)

2018/11/02 18:32:41 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp)

2018/11/02 18:32:41 [INFO] agent: started state syncer

2018/11/02 18:32:41 [INFO] agent: Retry join LAN is supported for: aliyun aws azure digitalocean gce k8s os packet scaleway softlayer triton vsphere

2018/11/02 18:32:41 [INFO] agent: Joining LAN cluster...

2018/11/02 18:32:41 [INFO] agent: (LAN) joining: [192.168.109.171:8301 192.168.109.172:8301 192.168.109.173:8301]

2018/11/02 18:32:41 [INFO] serf: Re-joined to previously known node: ConsulServer1: 192.168.109.171:8301

2018/11/02 18:32:41 [INFO] serf: Re-joined to previously known node: ConsulServer1.dc1: 192.168.109.171:8302

2018/11/02 18:32:42 [INFO] agent: (LAN) joined: 1 Err: <nil>

2018/11/02 18:32:42 [INFO] agent: Join LAN completed. Synced with 1 initial agents

2018/11/02 18:32:47 [INFO] serf: EventMemberJoin: consul2 192.168.109.172

2018/11/02 18:32:47 [INFO] consul: Adding LAN server consul2 (Addr: tcp/192.168.109.172:8300) (DC: dc1)

2018/11/02 18:32:47 [INFO] serf: EventMemberJoin: consul2.dc1 192.168.109.172

2018/11/02 18:32:47 [INFO] consul: Handled member-join event for server "consul2.dc1" in area "wan"

2018/11/02 18:32:49 [ERR] agent: failed to sync remote state: No cluster leader

2018/11/02 18:32:49 [WARN] raft: no known peers, aborting election

2018/11/02 18:32:51 [INFO] serf: EventMemberJoin: consul3 192.168.109.173

2018/11/02 18:32:51 [INFO] consul: Adding LAN server consul3 (Addr: tcp/192.168.109.173:8300) (DC: dc1)

2018/11/02 18:32:51 [INFO] consul: Found expected number of peers, attempting bootstrap: 192.168.109.173:8300,192.168.109.171:8300,192.168.109.172:8300

2018/11/02 18:32:51 [INFO] serf: EventMemberJoin: consul3.dc1 192.168.109.173

2018/11/02 18:32:51 [INFO] consul: Handled member-join event for server "consul3.dc1" in area "wan"

2018/11/02 18:32:56 [WARN] raft: Heartbeat timeout from "" reached, starting election

2018/11/02 18:32:56 [INFO] raft: Node at 192.168.109.171:8300 [Candidate] entering Candidate state in term 2

2018/11/02 18:32:56 [INFO] raft: Election won. Tally: 2

2018/11/02 18:32:56 [INFO] raft: Node at 192.168.109.171:8300 [Leader] entering Leader state

2018/11/02 18:32:56 [INFO] raft: Added peer d8c0d164-7ff0-f45f-bcf3-beea2e98e239, starting replication

2018/11/02 18:32:56 [INFO] raft: Added peer 8b472dc7-f7b8-825a-0600-4cf8152ecbf7, starting replication

2018/11/02 18:32:56 [INFO] consul: cluster leadership acquired

2018/11/02 18:32:56 [INFO] consul: New leader elected: consul1

2018/11/02 18:32:56 [WARN] raft: AppendEntries to {Voter 8b472dc7-f7b8-825a-0600-4cf8152ecbf7 192.168.109.172:8300} rejected, sending older logs (next: 1)

2018/11/02 18:32:56 [WARN] raft: AppendEntries to {Voter d8c0d164-7ff0-f45f-bcf3-beea2e98e239 192.168.109.173:8300} rejected, sending older logs (next: 1)

2018/11/02 18:32:56 [INFO] raft: pipelining replication to peer {Voter d8c0d164-7ff0-f45f-bcf3-beea2e98e239 192.168.109.173:8300}

2018/11/02 18:32:56 [INFO] raft: pipelining replication to peer {Voter 8b472dc7-f7b8-825a-0600-4cf8152ecbf7 192.168.109.172:8300}

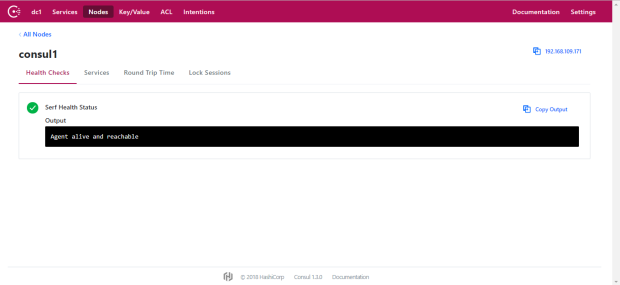

2018/11/02 18:32:56 [INFO] consul: member 'consul1' joined, marking health alive

2018/11/02 18:32:56 [INFO] consul: member 'consul2' joined, marking health alive

2018/11/02 18:32:56 [INFO] consul: member 'consul3' joined, marking health alive

2018/11/02 18:32:59 [INFO] agent: Synced node info

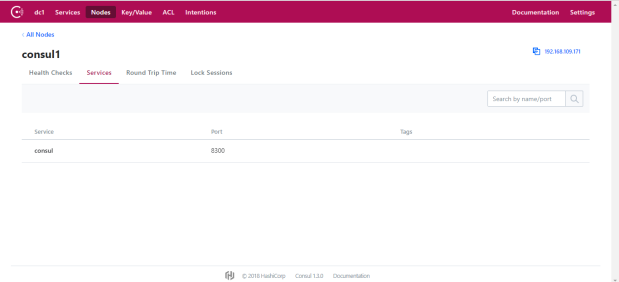

[root@consul1 ~]# consul services

Usage: consul services <subcommand> [options] [args]

This command has subcommands for interacting with services. The subcommands

default to working with services registered with the local agent. Please see

the "consul catalog" command for interacting with the entire catalog.

For more examples, ask for subcommand help or view the documentation.

Subcommands:

deregister Deregister services with the local agent

register Register services with the local agent

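The UI and HTTP API listen only on 127.0.0.1 (Client Addr in the startup output above), so reach them through an SSH tunnel from your workstation:

$ ssh -N -f -L 8500:localhost:8500 root@192.168.109.171

root@192.168.109.171's password:

Then open http://127.0.0.1:8500/ui/dc1/services in a browser.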

[root@consul1 ~]# ls -l /var/consul/

total 8

-rw-r--r--. 1 consul consul 394 Nov 2 18:17 checkpoint-signature

-rw-------. 1 consul consul 36 Nov 2 18:17 node-id

drwx------. 2 consul consul 27 Nov 2 18:44 proxy

drwxr-xr-x. 3 consul consul 56 Nov 2 18:17 raft

drwx------. 2 consul consul 94 Nov 2 18:17 serf

[root@consul1 ~]# cat /var/consul/checkpoint-signature

b2380c5f-abc4-cffe-6bea-1991ceb16a6c

This signature is a randomly generated UUID used to de-duplicate

alerts and version information. This signature is random, it is

not based on any personally identifiable information. To create

a new signature, you can simply delete this file at any time.

See the documentation for the software using Checkpoint for more

information on how to disable it.

[root@consul1 ~]# cat /var/consul/node-id

ff3a4ce5-0505-a9ef-d99a-718b1514ad8b

[root@consul1 ~]# cd /var/consul/


[root@consul1 consul]# ls -lR

.:

total 8

-rw-r--r--. 1 consul consul 394 Nov 2 18:17 checkpoint-signature

-rw-------. 1 consul consul 36 Nov 2 18:17 node-id

drwx------. 2 consul consul 27 Nov 2 18:44 proxy

drwxr-xr-x. 3 consul consul 56 Nov 2 18:17 raft

drwx------. 2 consul consul 94 Nov 2 18:17 serf

./proxy:

total 4

-rw-------. 1 consul consul 26 Nov 2 18:44 snapshot.json

./raft:

total 152

-rwxr-xr-x. 1 consul consul 2318 Nov 2 18:17 peers.info

-rw-------. 1 consul consul 262144 Nov 2 19:02 raft.db

drwxr-xr-x. 2 consul consul 6 Nov 2 18:44 snapshots

./raft/snapshots:

total 0

./serf:

total 16

-rw-------. 1 consul consul 28 Nov 2 18:17 local.keyring

-rw-r--r--. 1 consul consul 369 Nov 2 18:44 local.snapshot

-rw-------. 1 consul consul 28 Nov 2 18:17 remote.keyring

-rw-r--r--. 1 consul consul 359 Nov 2 18:44 remote.snapshot

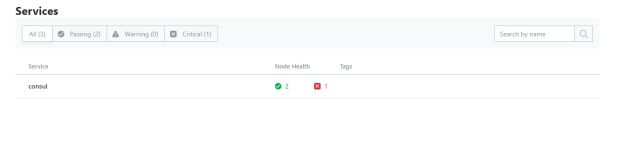

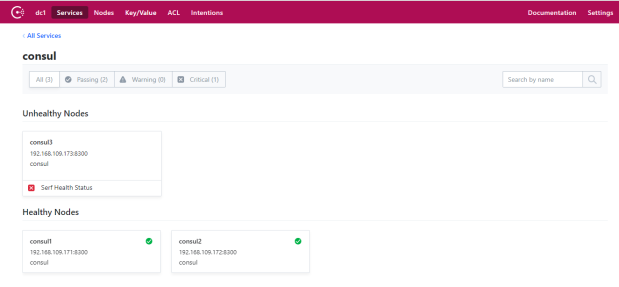

Now I manually shut down node 3 (consul3). consul members on consul1 then reports it as failed:

[root@consul1 consul]# consul members

Node Address Status Type Build Protocol DC Segment

consul1 192.168.109.171:8301 alive server 1.3.0 2 dc1 <all>

consul2 192.168.109.172:8301 alive server 1.3.0 2 dc1 <all>

consul3 192.168.109.173:8301 failed server 1.3.0 2 dc1 <all>
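consul3 is marked failed, yet consul info below still reports leader = true on consul1. This follows from Raft's majority rule. With N voting servers, a leader needs floor(N/2) + 1 of them, so the cluster tolerates floor((N - 1)/2) failures. Here is a small arithmetic sketch of that rule. The function names are hypothetical.

```shell
# Raft quorum arithmetic for N voting servers (shell integer division = floor).
raft_quorum()    { echo $(( $1 / 2 + 1 )); }     # servers needed for a majority
raft_tolerance() { echo $(( ($1 - 1) / 2 )); }   # failures the cluster survives

# has_quorum ALIVE TOTAL: succeed if ALIVE servers are still a majority of TOTAL
has_quorum() { [ "$1" -ge "$(raft_quorum "$2")" ]; }

# This cluster (bootstrap_expect = 3) after consul3 fails:
#   raft_quorum 3     prints 2
#   raft_tolerance 3  prints 1
#   has_quorum 2 3    succeeds, so a leader (here consul1) can be kept
```

Losing a second server would make has_quorum 1 3 fail. The remaining server could then neither elect a leader nor commit writes.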

[root@consul1 consul]# consul info

agent:

check_monitors = 0

check_ttls = 0

checks = 0

services = 0

build:

prerelease =

revision = e8757838

version = 1.3.0

consul:

bootstrap = false

known_datacenters = 1

leader = true

leader_addr = 192.168.109.171:8300

server = true

raft:

applied_index = 516

commit_index = 516

fsm_pending = 0

last_contact = 0

last_log_index = 516

last_log_term = 6

last_snapshot_index = 0

last_snapshot_term = 0

latest_configuration = [{Suffrage:Voter ID:d8c0d164-7ff0-f45f-bcf3-beea2e98e239 Address:192.168.109.173:8300} {Suffrage:Voter ID:ff3a4ce5-0505-a9ef-d99a-718b1514ad8b Address:192.168.109.171:8300} {Suffrage:Voter ID:8b472dc7-f7b8-825a-0600-4cf8152ecbf7 Address:192.168.109.172:8300}]

latest_configuration_index = 1

num_peers = 2

protocol_version = 3

protocol_version_max = 3

protocol_version_min = 0

snapshot_version_max = 1

snapshot_version_min = 0

state = Leader

term = 6

runtime:

arch = amd64

cpu_count = 1

goroutines = 106

max_procs = 1

os = linux

version = go1.11.1

serf_lan:

coordinate_resets = 0

encrypted = true

event_queue = 0

event_time = 4

failed = 0

health_score = 0

intent_queue = 0

left = 0

member_time = 7

members = 3

query_queue = 0

query_time = 1

serf_wan:

coordinate_resets = 0

encrypted = true

event_queue = 0

event_time = 1

failed = 0

health_score = 0

intent_queue = 0

left = 0

member_time = 5

members = 3

query_queue = 0

query_time = 1
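The consul info output is grouped into sections (agent, consul, raft, serf_lan, serf_wan, ...), and the same key can appear in more than one of them. For example, failed and members appear in both serf_lan and serf_wan. Below is a sketch of a section-aware lookup for scripts. consul_info_field is hypothetical. It assumes the layout shown above: a section header line ending in a colon, followed by key = value lines, which are normally indented.

```shell
# Hypothetical helper: print the value of KEY inside SECTION of consul info
# output read from stdin. Prints nothing if the key is not in that section.
# Usage: consul info | consul_info_field SECTION KEY
consul_info_field() {
  awk -v section="$1:" -v key="$2" '
    # A section header is a line such as "raft:" (trailing colon, no "=")
    /^[^=]*:[ \t]*$/ { current = $1; next }
    # Inside the wanted section, match "key = value" and print the value
    current == section && $1 == key && $2 == "=" {
      sub(/^[^=]*=[ \t]*/, "")
      print
      exit
    }
  '
}
```

For the output above, consul info | consul_info_field consul leader would print true, and consul info | consul_info_field raft num_peers would print 2.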