Make sure you have three machines ready (one master and two nodes), each with 3 GB of memory and 2 CPUs.
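You can confirm that each machine meets this sizing before you start with a small shell check against /proc/meminfo and nproc. The function below is a sketch. The name `check_node_resources` is hypothetical, and the 2.5 GiB memory floor is an assumption. A VM assigned 3 GB reports somewhat less usable memory (node1 later reports a capacity of 2854624Ki, about 2.7 GiB), so testing for a full 3 GiB would reject a correctly sized machine.

```shell
# check_node_resources [MEM_KB] [CPUS]
# Hypothetical helper. With no arguments it reads the local machine's values;
# passing values lets you exercise the logic without real hardware.
check_node_resources() {
  local mem_kb="${1:-$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)}"
  local cpus="${2:-$(nproc)}"
  local min_mem_kb=2621440   # assumed floor: 2.5 GiB, below the nominal 3 GB
  local min_cpus=2

  if [ "$mem_kb" -lt "$min_mem_kb" ]; then
    echo "insufficient memory: ${mem_kb} KiB < ${min_mem_kb} KiB" >&2
    return 1
  fi
  if [ "$cpus" -lt "$min_cpus" ]; then
    echo "insufficient CPUs: ${cpus} < ${min_cpus}" >&2
    return 1
  fi
  echo "ok: ${mem_kb} KiB memory, ${cpus} CPUs"
}

# Example: run on each host before installing
#   check_node_resources
```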

[root@localhost ~]# hostnamectl set-hostname master.example.com

[root@localhost ~]# bash

[root@master ~]#

[root@master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO=static

IPADDR=192.168.109.10

GATEWAY=192.168.109.2

[root@master ~]# service network restart
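The listing above shows only the keys that change in ifcfg-ens33. If you would rather script the edit than use vim, the sketch below sets a key in place, or appends it when it is missing. `set_ifcfg_key` is a hypothetical helper. In the example, PREFIX=24 matches the /24 address that `ip a` shows later. ONBOOT=yes and DNS1 (assumed here to be the gateway, 192.168.109.2) are assumptions to adapt to your network. The later `yum` and `git clone` steps need working name resolution.

```shell
# set_ifcfg_key FILE KEY VALUE
# Hypothetical helper: replaces an existing KEY=... line in FILE,
# or appends KEY=VALUE when no such line exists.
set_ifcfg_key() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # '|' as the sed delimiter so values containing '/' need no escaping
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example (master; DNS1 and ONBOOT are assumptions):
#   f=/etc/sysconfig/network-scripts/ifcfg-ens33
#   set_ifcfg_key "$f" BOOTPROTO static
#   set_ifcfg_key "$f" IPADDR 192.168.109.10
#   set_ifcfg_key "$f" PREFIX 24
#   set_ifcfg_key "$f" GATEWAY 192.168.109.2
#   set_ifcfg_key "$f" DNS1 192.168.109.2
#   set_ifcfg_key "$f" ONBOOT yes
```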

[root@master ~]# vim /etc/hosts

192.168.109.10 master.example.com master

192.168.109.11 node1.example.com node1

192.168.109.12 node2.example.com node2

Repeat the steps above on node1 and node2, using each node's own hostname and IP address (192.168.109.11 and 192.168.109.12).
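The same three entries go into /etc/hosts on every machine, so an idempotent helper keeps repeated runs from adding duplicate lines. This is a minimal sketch, and `add_host_entry` is a hypothetical name. It appends an entry only if no line in the file already has that IP address as its first field.

```shell
# add_host_entry FILE IP FQDN SHORTNAME
# Hypothetical helper: appends "IP FQDN SHORTNAME" unless FILE already has a line for IP.
add_host_entry() {
  local file="$1" ip="$2" fqdn="$3" short="$4"
  # Exact comparison on the first field, so 192.168.109.1 does not match 192.168.109.10
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { if (found) exit 0; exit 1 }' "$file"; then
    return 0
  fi
  printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Example (run on every host):
#   add_host_entry /etc/hosts 192.168.109.10 master.example.com master
#   add_host_entry /etc/hosts 192.168.109.11 node1.example.com node1
#   add_host_entry /etc/hosts 192.168.109.12 node2.example.com node2
```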

[root@master ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:D4dImhyIvgSG39O2NjfuRj5ZofrFfPMQdTJDQCCvqXw root@master.example.com


[root@master ~]# ssh-copy-id master

[root@master ~]# ssh-copy-id node1

[root@master ~]# ssh-copy-id node2
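The Ansible steps that follow depend on passwordless root SSH from the master to every host, including the master itself, so check it before continuing. The sketch below uses a hypothetical `check_passwordless_ssh` helper. It relies on ssh's `BatchMode=yes` option, which makes ssh fail instead of prompting for a password. A host whose key was not copied therefore shows up as an error.

```shell
# check_passwordless_ssh HOST...
# Hypothetical helper: tries a non-interactive login to each host and reports the result.
check_passwordless_ssh() {
  local host rc=0
  for host in "$@"; do
    # BatchMode=yes: fail instead of asking for a password
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "ok: $host"
    else
      echo "FAILED: $host (key not installed, or host unreachable)" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_passwordless_ssh master node1 node2
```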

[root@master ~]# yum install docker -y

[root@master ~]# systemctl start docker

[root@master ~]# systemctl enable docker

[root@master ~]# yum install -y wget git net-tools bind-utils yum-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct

[root@master ~]# yum install epel-release -y

[root@master ~]# yum install ansible pyOpenSSL -y

[root@master ~]# sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo
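The sed command switches every `enabled=1` line in epel.repo to `enabled=0`. EPEL stays installed but is not used unless requested (yum's `--enablerepo=epel` option enables it for a single command). To confirm that the edit took effect, the sketch below lists the sections of a .repo file that are still enabled. `enabled_repo_sections` is a hypothetical helper, and its awk parsing assumes the simple `key=value` layout that epel.repo uses.

```shell
# enabled_repo_sections FILE
# Hypothetical helper: prints the name of every [section] in a yum .repo file
# that contains an "enabled=1" line.
enabled_repo_sections() {
  awk '
    /^\[.*]$/       { section = substr($0, 2, length($0) - 2) }  # remember current [section]
    /^enabled *= *1 *$/ { print section }
  ' "$1"
}

# Example (expect no output after the sed above):
#   enabled_repo_sections /etc/yum.repos.d/epel.repo
```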

[root@master ~]# git clone -b release-3.9 https://github.com/openshift/openshift-ansible.git

[root@master ~]# echo "" > /etc/ansible/hosts

[root@master ~]# vim /etc/ansible/hosts

[OSEv3:children]

masters

nodes

etcd

nfs

[OSEv3:vars]

ansible_ssh_user=root

deployment_type=origin

containerized=true

os_firewall_use_firewalld=true

openshift_clock_enabled=true

openshift_release=v3.9.0

openshift_public_hostname=192.168.109.10

openshift_master_default_subdomain=192.168.109.10.nip.io

openshift_disable_check=memory_availability,disk_availability,docker_storage,docker_image_availability

[masters]

master

[nodes]

master openshift_node_labels="{'region': 'infra', 'zone': 'default'}" openshift_schedulable=true

node1 openshift_node_labels="{'region': 'primary', 'zone': 'east'}"

node2 openshift_node_labels="{'region': 'primary', 'zone': 'west'}"

[etcd]

master

[nfs]

master
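A lost line break in this file, such as `nfs[OSEv3:vars]` on one line, silently folds a group header into the previous section. The sketch below uses a hypothetical `check_inventory_sections` helper. It confirms that every group header used in this inventory stands on a line of its own. To see how Ansible itself parses the file, run `ansible-inventory -i /etc/ansible/hosts --graph`, which prints the group tree (the `ansible-inventory` command ships with Ansible 2.4 and later).

```shell
# check_inventory_sections FILE
# Hypothetical helper: fails if any expected group header is not on its own line.
check_inventory_sections() {
  local file="$1" section rc=0
  for section in 'OSEv3:children' 'OSEv3:vars' masters nodes etcd nfs; do
    # -x: whole-line match; -F: fixed string, so the brackets are literal
    if ! grep -qxF "[$section]" "$file"; then
      echo "missing or malformed header: [$section]" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_inventory_sections /etc/ansible/hosts
```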

#########################################

[root@master ~]# ansible -m ping all

node1 | SUCCESS => {

"changed": false,

"ping": "pong"

}

master | SUCCESS => {

"changed": false,

"ping": "pong"

}

node2 | SUCCESS => {

"changed": false,

"ping": "pong"

}

[root@master ~]# ansible-playbook ~/openshift-ansible/playbooks/prerequisites.yml

FATAL: Current Ansible version (2.4.2.0) is not supported. Supported versions: 2.4.3.0 or newer

If your installed Ansible is older than the supported version, remove it and install 2.4.3.0 or newer, as shown below.

[root@master ~]# yum remove ansible

The RPMs used below are hosted on the CentOS Community Build Service (CBS): https://cbs.centos.org/koji/packageinfo?packageID=1947

[root@master ~]# yum install http://cbs.centos.org/kojifiles/packages/python-keyczar/0.71c/2.el7/noarch/python-keyczar-0.71c-2.el7.noarch.rpm

[root@master ~]# wget http://cbs.centos.org/kojifiles/packages/ansible/2.4.3.0/1.el7/noarch/ansible-2.4.3.0-1.el7.noarch.rpm

[root@master ~]# yum install ansible-2.4.3.0-1.el7.noarch.rpm

[root@master ~]# ansible --version

ansible 2.4.3.0

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Jul 13 2018, 13:06:57) [GCC 4.8.5 20150623 (Red Hat 4.8.5-28)]
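The FATAL message above compares dotted version numbers, and a plain string comparison gets these wrong (as strings, 2.4.10 sorts before 2.4.3). GNU `sort -V` orders them correctly, so a minimal check can be built on it. `version_ge` is a hypothetical helper.

```shell
# version_ge A B
# Hypothetical helper: succeeds when version A >= version B, using GNU sort's
# version ordering. If B sorts first (or A equals B), A is at least B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example: the first line of `ansible --version` is "ansible 2.4.3.0"
#   installed=$(ansible --version | awk 'NR == 1 { print $2 }')
#   version_ge "$installed" 2.4.3.0 || echo "ansible $installed is too old"
```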

Make sure the inventory file (/etc/ansible/hosts) is complete, then run the prerequisites playbook again:

[root@master ~]# ansible-playbook ~/openshift-ansible/playbooks/prerequisites.yml

(output truncated)

localhost : ok=12 changed=0 unreachable=0 failed=0

master : ok=75 changed=13 unreachable=0 failed=0

node1 : ok=63 changed=13 unreachable=0 failed=0

node2 : ok=63 changed=13 unreachable=0 failed=0

INSTALLER STATUS *********************************************************************************************************************************************************************

Initialization : Complete (0:00:21)

#######################


[root@master ~]# ansible-playbook ~/openshift-ansible/playbooks/deploy_cluster.yml

TASK [Set Management install 'Complete'] *********************************************************************************************************************************************

skipping: [master]

PLAY RECAP ***************************************************************************************************************************************************************************

localhost : ok=14 changed=0 unreachable=0 failed=0

master : ok=633 changed=260 unreachable=0 failed=0

node1 : ok=141 changed=58 unreachable=0 failed=0

node2 : ok=141 changed=57 unreachable=0 failed=0

INSTALLER STATUS *********************************************************************************************************************************************************************

Initialization : Complete (0:00:24)

Health Check : Complete (0:00:03)

etcd Install : Complete (0:02:17)

NFS Install : Complete (0:00:13)

Master Install : Complete (0:05:54)

Master Additional Install : Complete (0:00:40)

Node Install : Complete (0:16:14)

Hosted Install : Complete (0:01:28)

Web Console Install : Complete (0:00:38)

Service Catalog Install : Complete (0:02:54)
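`ansible-playbook` already exits with a non-zero status when a host fails, but you lose that status if you pipe the run through `tee` to keep a log. In that case you can check the PLAY RECAP lines afterwards. The sketch below uses a hypothetical `recap_ok` helper. It fails if any recap line reports a non-zero `unreachable` or `failed` count.

```shell
# recap_ok < LOG
# Hypothetical helper: reads ansible-playbook output on stdin and fails if any
# PLAY RECAP line has unreachable=N or failed=N with N > 0.
recap_ok() {
  awk '
    /unreachable=[0-9]+/ && /failed=[0-9]+/ {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^(unreachable|failed)=/) {
          split($i, kv, "=")
          if (kv[2] + 0 > 0) {
            print "problem on " $1 ": " $i > "/dev/stderr"
            bad = 1
          }
        }
      }
    }
    END { if (bad) exit 1; exit 0 }
  '
}

# Example:
#   ansible-playbook ~/openshift-ansible/playbooks/deploy_cluster.yml | tee deploy.log
#   recap_ok < deploy.log && echo "no failed or unreachable hosts"
```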


[root@master ~]# docker --version

Docker version 1.13.1, build 6e3bb8e/1.13.1

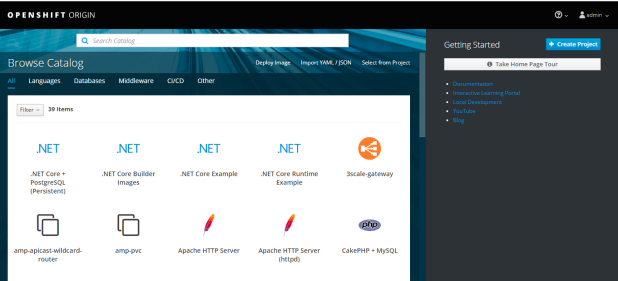

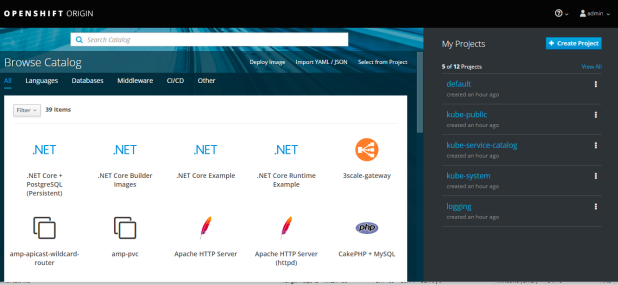

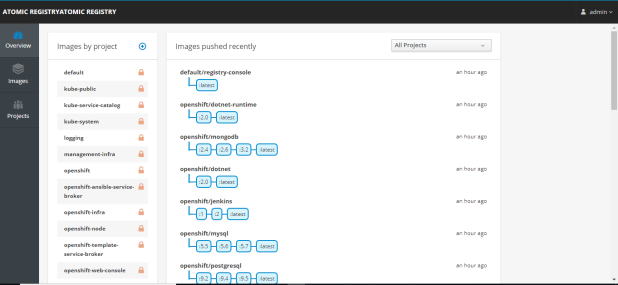

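Log in to the OpenShift web console at https://192.168.109.10:8443/console/ with username admin and password admin.

The allow_all:admin identity shown by oc get users below comes from the AllowAll identity provider, which accepts any password.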

[root@master ~]# oc get rolebindings

NAME ROLE USERS GROUPS SERVICE ACCOUNTS SUBJECTS

system:deployers /system:deployer deployer

system:image-builders /system:image-builder builder

system:image-pullers /system:image-puller system:serviceaccounts:default

[root@master ~]# oc get users

NAME UID FULL NAME IDENTITIES

admin 7105a404-c155-11e8-8a19-000c29a4327b allow_all:admin

root d2e0293e-c15b-11e8-a5d4-000c29a4327b allow_all:root

[root@master ~]# oc adm policy add-cluster-role-to-user cluster-admin admin

cluster role "cluster-admin" added: "admin"

[root@master ~]# oc get nodes

NAME STATUS ROLES AGE VERSION

master.example.com Ready master 14m v1.9.1+a0ce1bc657

node1.example.com Ready compute 2m v1.9.1+a0ce1bc657

node2.example.com Ready compute 2m v1.9.1+a0ce1bc657
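For scripted installs, you can check the `oc get nodes` table automatically instead of by eye. The sketch below uses a hypothetical `all_nodes_ready` helper. It reads that output on stdin and fails if any node's STATUS is not Ready. It accepts `Ready,SchedulingDisabled`, which is the status shown for a node marked unschedulable. It also fails on empty input, so a failed `oc` call is not mistaken for success.

```shell
# all_nodes_ready < OC_GET_NODES_OUTPUT
# Hypothetical helper: fails if any node row has a STATUS other than Ready
# (or Ready,<something>), or if no node rows were read at all.
all_nodes_ready() {
  awk '
    $1 == "NAME" { next }            # skip the header row
    NF == 0      { next }            # ignore blank lines
    {
      total++
      if ($2 != "Ready" && $2 !~ /^Ready,/) {
        print "not ready: " $1 " (" $2 ")" > "/dev/stderr"
        bad = 1
      }
    }
    END { if (bad || total == 0) exit 1; exit 0 }
  '
}

# Example:
#   oc get nodes | all_nodes_ready && echo "all nodes Ready"
```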

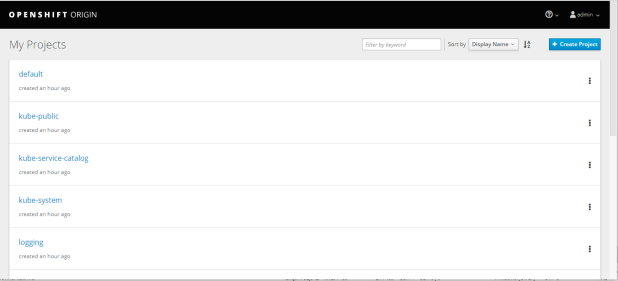

[root@master ~]# oc get ns

NAME STATUS AGE

default Active 19m

kube-public Active 19m

kube-system Active 19m

logging Active 1m

management-infra Active 17m

openshift Active 19m

openshift-infra Active 19m

openshift-node Active 19m

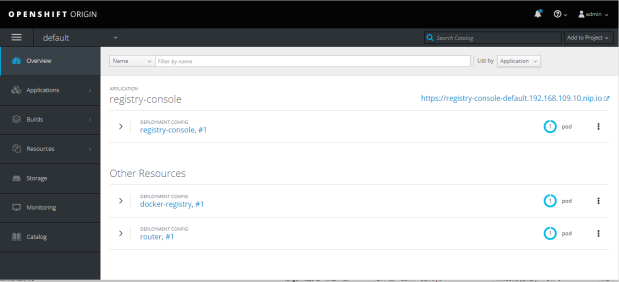

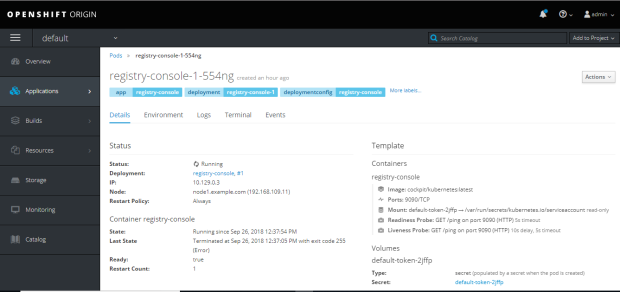

[root@master ~]# oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-nhclf 1/1 Running 0 4m

registry-console-1-554ng 1/1 Running 0 3m

router-1-r9slq 1/1 Running 0 4m

[root@master ~]# oc get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

docker-registry-1-nhclf 1/1 Running 1 1h 10.128.0.14 master.example.com

registry-console-1-554ng 1/1 Running 1 1h 10.129.0.3 node1.example.com

router-1-r9slq 1/1 Running 1 1h 192.168.109.10 master.example.com

[root@master ~]# oc get pods -o wide --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

default docker-registry-1-nhclf 1/1 Running 1 1h 10.128.0.14 master.example.com

default registry-console-1-554ng 1/1 Running 1 1h 10.129.0.3 node1.example.com

default router-1-r9slq 1/1 Running 1 1h 192.168.109.10 master.example.com

kube-service-catalog apiserver-pxv6r 1/1 Running 1 1h 10.128.0.16 master.example.com

kube-service-catalog controller-manager-vnnnj 1/1 Running 3 1h 10.128.0.15 master.example.com

openshift-ansible-service-broker asb-1-deploy 0/1 Error 0 1h <none> master.example.com

openshift-ansible-service-broker asb-etcd-1-deploy 0/1 Error 0 1h <none> master.example.com

openshift-template-service-broker apiserver-dbqjz 1/1 Running 1 59m 10.128.0.17 master.example.com

openshift-web-console webconsole-5f649b49b5-xcncn 1/1 Running 1 1h 10.128.0.12 master.example.com
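In this listing, the deployer pods `asb-1-deploy` and `asb-etcd-1-deploy` in the `openshift-ansible-service-broker` namespace are in `Error`. This suggests the Ansible Service Broker rollout did not complete. `oc logs asb-1-deploy -n openshift-ansible-service-broker` is a reasonable first place to look. Such pods are easy to miss in a long list. The sketch below uses a hypothetical `unhealthy_pods` helper to filter `oc get pods --all-namespaces` output down to pods whose STATUS is neither Running nor Completed.

```shell
# unhealthy_pods < OC_GET_PODS_ALL_NAMESPACES_OUTPUT
# Hypothetical helper: prints "NAMESPACE/NAME STATUS" for pods not Running or Completed.
# Column 4 is STATUS in both the default and -o wide --all-namespaces layouts.
unhealthy_pods() {
  awk '
    $1 == "NAMESPACE" { next }       # skip the header row
    NF >= 4 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }
  '
}

# Example:
#   oc get pods --all-namespaces | unhealthy_pods
```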

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a4:32:7b brd ff:ff:ff:ff:ff:ff

inet 192.168.109.10/24 brd 192.168.109.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::24c9:7d:23e3:a577/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:57:68:3b:0e brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

valid_lft forever preferred_lft forever

4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether f6:0e:12:f6:02:86 brd ff:ff:ff:ff:ff:ff

8: br0: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN qlen 1000

link/ether fe:55:98:d1:86:41 brd ff:ff:ff:ff:ff:ff

9: vxlan_sys_4789: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65470 qdisc noqueue master ovs-system state UNKNOWN qlen 1000

link/ether 72:e9:1f:42:71:9f brd ff:ff:ff:ff:ff:ff

inet6 fe80::70e9:1fff:fe42:719f/64 scope link

valid_lft forever preferred_lft forever

10: tun0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN qlen 1000

link/ether f6:56:c7:61:d7:61 brd ff:ff:ff:ff:ff:ff

inet 10.128.0.1/23 brd 10.128.1.255 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::f456:c7ff:fe61:d761/64 scope link

valid_lft forever preferred_lft forever

11: veth4442e269@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP

link/ether 7e:b9:41:de:c2:2e brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::7cb9:41ff:fede:c22e/64 scope link

valid_lft forever preferred_lft forever

13: vethc8a2ca35@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP

link/ether 6e:c3:28:37:17:f0 brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::6cc3:28ff:fe37:17f0/64 scope link

valid_lft forever preferred_lft forever

14: vethf4f897b4@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP

link/ether ce:6a:17:b6:57:6e brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::cc6a:17ff:feb6:576e/64 scope link

valid_lft forever preferred_lft forever

15: veth42815355@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP

link/ether 26:e1:cf:48:50:08 brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::24e1:cfff:fe48:5008/64 scope link

valid_lft forever preferred_lft forever

16: vethbc177b31@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP

link/ether 82:12:8e:11:67:b3 brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::8012:8eff:fe11:67b3/64 scope link

valid_lft forever preferred_lft forever

[root@master ~]# oc get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

docker-registry ClusterIP 172.30.49.186 <none> 5000/TCP 4m

kubernetes ClusterIP 172.30.0.1 <none> 443/TCP,53/UDP,53/TCP 23m

registry-console ClusterIP 172.30.17.153 <none> 9000/TCP 3m

router ClusterIP 172.30.245.247 <none> 80/TCP,443/TCP,1936/TCP 4m

[root@master ~]# oc get routes

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

docker-registry docker-registry-default.192.168.109.10.nip.io docker-registry <all> passthrough None

registry-console registry-console-default.192.168.109.10.nip.io registry-console <all> passthrough None
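These route hostnames resolve without any local DNS configuration because of `openshift_master_default_subdomain=192.168.109.10.nip.io` in the inventory. The public nip.io service answers a query for `<anything>.<IPv4 address>.nip.io` with the embedded address. Every route under that subdomain therefore points at the router running on 192.168.109.10. The sketch below uses a hypothetical `nip_io_ip` helper to extract the embedded address from such a name, which shows which host a route will reach. With internet DNS available, `dig +short docker-registry-default.192.168.109.10.nip.io` should return the same address (dig comes from the bind-utils package installed earlier).

```shell
# nip_io_ip HOSTNAME
# Hypothetical helper: prints the dotted-quad embedded just before ".nip.io".
# "grep ." makes the function fail when the name does not match the pattern.
nip_io_ip() {
  printf '%s\n' "$1" \
    | sed -nE 's/^(.*\.)?([0-9]{1,3}(\.[0-9]{1,3}){3})\.nip\.io$/\2/p' \
    | grep .
}

# Example:
#   nip_io_ip registry-console-default.192.168.109.10.nip.io
```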

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/ansibleplaybookbundle/origin-ansible-service-broker v3.9 d2d0238644e8 4 days ago 474 MB

registry.fedoraproject.org/latest/etcd latest 6c1ecdb769b7 2 months ago 313 MB

docker.io/openshift/origin-web-console v3.9.0 aa12a2fc57f7 3 months ago 495 MB

docker.io/openshift/origin-docker-registry v3.9.0 8e6f7a854d66 3 months ago 465 MB

docker.io/openshift/openvswitch v3.9.0 d0cc33de4239 3 months ago 1.49 GB

docker.io/openshift/node v3.9.0 7cd7ee7d43cc 3 months ago 1.46 GB

docker.io/openshift/origin-haproxy-router v3.9.0 448cc9658480 3 months ago 1.28 GB

docker.io/openshift/origin-deployer v3.9.0 39ee47797d2e 3 months ago 1.26 GB

docker.io/openshift/origin v3.9.0 4ba9c8c8f42a 3 months ago 1.26 GB

docker.io/openshift/origin-service-catalog v3.9.0 96cf7dd047cb 3 months ago 296 MB

docker.io/openshift/origin-template-service-broker v3.9.0 be41388b9fcb 3 months ago 308 MB

docker.io/openshift/origin-pod v3.9.0 6e08365fbba9 3 months ago 223 MB

[root@master ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

214464594b15 96cf7dd047cb "/usr/bin/service-…" 16 minutes ago Up 16 minutes k8s_controller-manager_controller-manager-vnnnj_kube-service-catalog_54cd296a-c155-11e8-8a19-000c29a4327b_3

8344e88aa3f9 96cf7dd047cb "/usr/bin/service-…" 16 minutes ago Up 16 minutes k8s_apiserver_apiserver-pxv6r_kube-service-catalog_50df6133-c155-11e8-8a19-000c29a4327b_1

831f05575845 be41388b9fcb "/usr/bin/template…" 16 minutes ago Up 16 minutes k8s_c_apiserver-dbqjz_openshift-template-service-broker_80e694bb-c155-11e8-8a19-000c29a4327b_1

83ae343567fa openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_apiserver-pxv6r_kube-service-catalog_50df6133-c155-11e8-8a19-000c29a4327b_1

a701b56dac0b openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_apiserver-dbqjz_openshift-template-service-broker_80e694bb-c155-11e8-8a19-000c29a4327b_1

67d7acd3c74e 8e6f7a854d66 "/bin/sh -c '/usr/…" 16 minutes ago Up 16 minutes k8s_registry_docker-registry-1-nhclf_default_07eefb7c-c155-11e8-8a19-000c29a4327b_1

a48adc823db2 openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_controller-manager-vnnnj_kube-service-catalog_54cd296a-c155-11e8-8a19-000c29a4327b_1

cdba093d7099 aa12a2fc57f7 "/usr/bin/origin-w…" 16 minutes ago Up 16 minutes k8s_webconsole_webconsole-5f649b49b5-xcncn_openshift-web-console_20f9d368-c155-11e8-8a19-000c29a4327b_1

f1929d75d666 448cc9658480 "/usr/bin/openshif…" 16 minutes ago Up 16 minutes k8s_router_router-1-r9slq_default_fd642fe8-c154-11e8-8a19-000c29a4327b_1

abe784d3d9b1 openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_docker-registry-1-nhclf_default_07eefb7c-c155-11e8-8a19-000c29a4327b_1

6e05b738ba6a openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_webconsole-5f649b49b5-xcncn_openshift-web-console_20f9d368-c155-11e8-8a19-000c29a4327b_1

a6c8c089f8bf openshift/origin-pod:v3.9.0 "/usr/bin/pod" 16 minutes ago Up 16 minutes k8s_POD_router-1-r9slq_default_fd642fe8-c154-11e8-8a19-000c29a4327b_1

bd82db30fce7 openshift/node:v3.9.0 "/usr/local/bin/or…" 17 minutes ago Up 17 minutes origin-node

0b9a7e5fe4d2 openshift/origin:v3.9.0 "/usr/bin/openshif…" 17 minutes ago Up 17 minutes origin-master-controllers

b2229aa2e10d openshift/origin:v3.9.0 "/usr/bin/openshif…" 17 minutes ago Up 17 minutes origin-master-api

fed7e3a4d541 registry.fedoraproject.org/latest/etcd "/usr/bin/etcd" 17 minutes ago Up 17 minutes etcd_container

b8e6c4182a87 openshift/openvswitch:v3.9.0 "/usr/local/bin/ov…" 17 minutes ago Up 17 minutes openvswitch

[root@master ~]# netstat -tlpn |grep openshift

tcp 0 0 127.0.0.1:53 0.0.0.0:* LISTEN 8041/openshift

tcp 0 0 0.0.0.0:8053 0.0.0.0:* LISTEN 4610/openshift

tcp 0 0 0.0.0.0:8443 0.0.0.0:* LISTEN 4610/openshift

tcp 0 0 0.0.0.0:8444 0.0.0.0:* LISTEN 7828/openshift

tcp6 0 0 :::10250 :::* LISTEN 8041/openshift

tcp6 0 0 :::1936 :::* LISTEN 9642/openshift-rout

tcp6 0 0 :::10256 :::* LISTEN 8041/openshift

[root@master ~]# netstat -tlpn |grep etcd

tcp 0 0 192.168.109.10:2379 0.0.0.0:* LISTEN 33363/etcd

tcp 0 0 192.168.109.10:2380 0.0.0.0:* LISTEN 33363/etcd

[root@master ~]# netstat -tlpn |grep haproxy

tcp 0 0 127.0.0.1:10443 0.0.0.0:* LISTEN 15425/haproxy

tcp 0 0 127.0.0.1:10444 0.0.0.0:* LISTEN 15425/haproxy

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 15425/haproxy

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 15425/haproxy
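These listings show the master's expected listeners:

- the API and web console on 8443
- etcd client and peer traffic on 2379 and 2380
- the HAProxy router on 80 and 443

To repeat the check after a reboot, the sketch below uses a hypothetical `port_listening` helper. It reads `netstat -tlpn` output on stdin and succeeds if some listening socket uses the given port.

```shell
# port_listening PORT < NETSTAT_OUTPUT
# Hypothetical helper. Column 4 of netstat output is the local address,
# e.g. 0.0.0.0:8443 or :::10250; the text after the last ':' is the port.
port_listening() {
  awk -v port="$1" '
    /LISTEN/ {
      n = split($4, parts, ":")
      if (parts[n] == port) found = 1
    }
    END { if (found) exit 0; exit 1 }
  '
}

# Example:
#   out=$(netstat -tlpn)
#   for p in 8443 2379 2380 80 443; do
#     printf '%s\n' "$out" | port_listening "$p" || echo "nothing listening on $p"
#   done
```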

[root@master ~]# systemctl status origin-master-api

● origin-master-api.service - Atomic OpenShift Master API

Loaded: loaded (/etc/systemd/system/origin-master-api.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-09-26 11:40:51 IST; 8min ago

Docs: https://github.com/openshift/origin

Main PID: 33317 (docker-current)

CGroup: /system.slice/origin-master-api.service

[root@master ~]# systemctl status origin-master-controllers

● origin-master-controllers.service - Atomic OpenShift Master Controllers

Loaded: loaded (/etc/systemd/system/origin-master-controllers.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-09-26 11:41:01 IST; 8min ago

[root@master ~]# systemctl status origin-node

● origin-node.service

Loaded: loaded (/etc/systemd/system/origin-node.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-09-26 11:41:16 IST; 8min ago

Main PID: 34639 (docker-current)

[root@master ~]# systemctl status origin-node-dep

● origin-node-dep.service

Loaded: loaded (/etc/systemd/system/origin-node-dep.service; static; vendor preset: disabled)

Active: inactive (dead) since Wed 2018-09-26 11:41:06 IST; 9min ago

Main PID: 34626 (code=exited, status=0/SUCCESS)

Sep 26 11:41:06 master.example.com systemd[1]: Started origin-node-dep.service.

Sep 26 11:41:06 master.example.com systemd[1]: Starting origin-node-dep.service…
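An `inactive (dead)` status for `origin-node-dep` is not a failure. The unit exited with status 0 right after starting, so it appears to be a one-shot helper. Only the long-running units need to be active. The sketch below uses a hypothetical `check_units` helper. It asks `systemctl is-active --quiet` about each unit and reports any that are not running.

```shell
# check_units UNIT...
# Hypothetical helper: fails if any of the given systemd units is not active.
check_units() {
  local unit rc=0
  for unit in "$@"; do
    if systemctl is-active --quiet "$unit"; then
      echo "active: $unit"
    else
      echo "NOT active: $unit" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example (master, containerized install):
#   check_units docker origin-master-api origin-master-controllers origin-node
```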

[root@master ~]# oc describe node node1.example.com

Name: node1.example.com

Roles: compute

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=node1.example.com

node-role.kubernetes.io/compute=true

region=primary

zone=east

Annotations: volumes.kubernetes.io/controller-managed-attach-detach=true

Taints: <none>

CreationTimestamp: Wed, 26 Sep 2018 11:52:48 +0530

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

OutOfDisk False Wed, 26 Sep 2018 12:42:50 +0530 Wed, 26 Sep 2018 12:37:27 +0530 KubeletHasSufficientDisk kubelet has sufficient disk space available

MemoryPressure False Wed, 26 Sep 2018 12:42:50 +0530 Wed, 26 Sep 2018 12:37:27 +0530 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Wed, 26 Sep 2018 12:42:50 +0530 Wed, 26 Sep 2018 12:37:27 +0530 KubeletHasNoDiskPressure kubelet has no disk pressure

Ready True Wed, 26 Sep 2018 12:42:50 +0530 Wed, 26 Sep 2018 12:37:38 +0530 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.109.11

Hostname: node1.example.com

Capacity:

cpu: 2

memory: 2854624Ki

pods: 20

Allocatable:

cpu: 2

memory: 2752224Ki

pods: 20

System Info:

Machine ID: ef3d928fa5c240f78270c57a96cad856

System UUID: 06994D56-C736-3036-89AD-C6551F5379B7

Boot ID: dc7450b1-d809-45d0-943e-0a79344d11a2

Kernel Version: 3.10.0-693.21.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://1.13.1

Kubelet Version: v1.9.1+a0ce1bc657

Kube-Proxy Version: v1.9.1+a0ce1bc657

ExternalID: node1.example.com

Non-terminated Pods: (1 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits

--------- ---- ------------ ---------- --------------- -------------

default registry-console-1-554ng 0 (0%) 0 (0%) 0 (0%) 0 (0%)

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

CPU Requests CPU Limits Memory Requests Memory Limits

------------ ---------- --------------- -------------

0 (0%) 0 (0%) 0 (0%) 0 (0%)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Starting 50m kubelet, node1.example.com Starting kubelet.

Normal NodeAllocatableEnforced 50m kubelet, node1.example.com Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 39m (x3 over 50m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 39m (x3 over 50m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 39m (x3 over 50m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasNoDiskPressure

Normal NodeReady 39m (x2 over 49m) kubelet, node1.example.com Node node1.example.com status is now: NodeReady

Normal Starting 5m kubelet, node1.example.com Starting kubelet.

Normal NodeAllocatableEnforced 5m kubelet, node1.example.com Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 5m (x2 over 5m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 5m (x2 over 5m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 5m (x2 over 5m) kubelet, node1.example.com Node node1.example.com status is now: NodeHasNoDiskPressure

Warning Rebooted 5m kubelet, node1.example.com Node node1.example.com has been rebooted, boot id: dc7450b1-d809-45d0-943e-0a79344d11a2

Normal NodeNotReady 5m kubelet, node1.example.com Node node1.example.com status is now: NodeNotReady

Normal NodeReady 5m kubelet, node1.example.com Node node1.example.com status is now: NodeReady

[root@master ~]# cat .kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM2akNDQWRLZ0F3SUJBZ0lCQVRBTkJna3Foa2lHOXcwQkFRc0ZBREFtTVNRd0lnWURWUVFEREJ0dmNHVnUKYzJocFpuUXRjMmxuYm1WeVFERTFNemM1TkRFNU16UXdIaGNOTVRnd09USTJNRFl3TlRNMFdoY05Nak13T1RJMQpNRFl3TlRNMVdqQW1NU1F3SWdZRFZRUUREQnR2Y0dWdWMyaHBablF0YzJsbmJtVnlRREUxTXpjNU5ERTVNelF3CmdnRWlNQTBHQ1NxR1NJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURSYWtpb29td1RZWEJOeUdUcFhJc0IKNGI0YVpGQnVWd0k4RnhXOHJCSVRYTnNPYyt5MGNFVlowbkxXVVFRWFhvTUt3UGRrM1czdzM4T3lDM3l1bkhkbwp6WTIwTmJlSHM5YjNnMnJFaUpMbncreU1xSG9qZmcrR1NUQWJMOUJIOGVnbXZ6cGFYODVFdTUwSVgzSXlVZVNKCldCTkZ1ZHg0Z0lLMnNLak1JYWpDUVNsVnFpZW9odGY5SW9rcDU3M1Jwa2JqWXZxL2M3aUQ1SkE3RUJqRnNHWmEKNXIwWmNWNUNQVGxEUjgxblQyeWcxZVhMU05PV3pFczdqdG1lSnoyaWc2S29Ka1hjQjA2U0RVYmZ2SE5mQ25uTAp4ZURraGdIa3c2K2tOR3BuQ01QV1kzSUxwK0FpeGpWdGNTREFTZUUzT1ZNQ1pJWXFkNDFKZXdmWVVuRGZEVmpMCkFnTUJBQUdqSXpBaE1BNEdBMVVkRHdFQi93UUVBd0lDcERBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUEwR0NTcUcKU0liM0RRRUJDd1VBQTRJQkFRQlFVc2d0bXlKUjZCSWdDVE5iRm9vcjBCWjBIMEw3ZThKMWVZaE5rOStXTjBxVwpBODd1dGEybjdydktCKzB6QTZ5cG84YWFNM2QyY2xrT0lYRklZY0RsSS9aSHdxTFJUWWpaWG9EL3h3UXprVFozCjRySURjWWNwZGxjUWdxeFdWRlloRElqUDI2MERwdVpTNEExNGJwNkJvUWRjN0VxS0F2S3cxNG5WZ0hsV3hDRlMKWU9nbk9CVXBwV243NncxREMxQWIyazJFNkZvQ3Q2aFRiV2g0Wlh4VzlIQmxVRVR2TjNEenNrcDkvS2duQTdyVAo1anQ1cFJQSTlXN0dXOFkwMVN3cC8zeW1JaTJQalNVS1BSTmcyUU82OVZGdnhaNytWbWo0K1kyKzZ4TkpjUEt3CnpPTlVTcldUVE5DdzdVYllmdjkyVHVSNmtFdVMzNFV3ZWN0SVlLK2UKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.109.10:8443

name: 192-168-109-10:8443

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM2akNDQWRLZ0F3SUJBZ0lCQVRBTkJna3Foa2lHOXcwQkFRc0ZBREFtTVNRd0lnWURWUVFEREJ0dmNHVnUKYzJocFpuUXRjMmxuYm1WeVFERTFNemM1TkRFNU16UXdIaGNOTVRnd09USTJNRFl3TlRNMFdoY05Nak13T1RJMQpNRFl3TlRNMVdqQW1NU1F3SWdZRFZRUUREQnR2Y0dWdWMyaHBablF0YzJsbmJtVnlRREUxTXpjNU5ERTVNelF3CmdnRWlNQTBHQ1NxR1NJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURSYWtpb29td1RZWEJOeUdUcFhJc0IKNGI0YVpGQnVWd0k4RnhXOHJCSVRYTnNPYyt5MGNFVlowbkxXVVFRWFhvTUt3UGRrM1czdzM4T3lDM3l1bkhkbwp6WTIwTmJlSHM5YjNnMnJFaUpMbncreU1xSG9qZmcrR1NUQWJMOUJIOGVnbXZ6cGFYODVFdTUwSVgzSXlVZVNKCldCTkZ1ZHg0Z0lLMnNLak1JYWpDUVNsVnFpZW9odGY5SW9rcDU3M1Jwa2JqWXZxL2M3aUQ1SkE3RUJqRnNHWmEKNXIwWmNWNUNQVGxEUjgxblQyeWcxZVhMU05PV3pFczdqdG1lSnoyaWc2S29Ka1hjQjA2U0RVYmZ2SE5mQ25uTAp4ZURraGdIa3c2K2tOR3BuQ01QV1kzSUxwK0FpeGpWdGNTREFTZUUzT1ZNQ1pJWXFkNDFKZXdmWVVuRGZEVmpMCkFnTUJBQUdqSXpBaE1BNEdBMVVkRHdFQi93UUVBd0lDcERBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUEwR0NTcUcKU0liM0RRRUJDd1VBQTRJQkFRQlFVc2d0bXlKUjZCSWdDVE5iRm9vcjBCWjBIMEw3ZThKMWVZaE5rOStXTjBxVwpBODd1dGEybjdydktCKzB6QTZ5cG84YWFNM2QyY2xrT0lYRklZY0RsSS9aSHdxTFJUWWpaWG9EL3h3UXprVFozCjRySURjWWNwZGxjUWdxeFdWRlloRElqUDI2MERwdVpTNEExNGJwNkJvUWRjN0VxS0F2S3cxNG5WZ0hsV3hDRlMKWU9nbk9CVXBwV243NncxREMxQWIyazJFNkZvQ3Q2aFRiV2g0Wlh4VzlIQmxVRVR2TjNEenNrcDkvS2duQTdyVAo1anQ1cFJQSTlXN0dXOFkwMVN3cC8zeW1JaTJQalNVS1BSTmcyUU82OVZGdnhaNytWbWo0K1kyKzZ4TkpjUEt3CnpPTlVTcldUVE5DdzdVYllmdjkyVHVSNmtFdVMzNFV3ZWN0SVlLK2UKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://master.example.com:8443

name: master-example-com:8443

contexts:

- context:

cluster: 192-168-109-10:8443

namespace: default

user: system:admin/master-example-com:8443

name: default/192-168-109-10:8443/system:admin

- context:

cluster: master-example-com:8443

namespace: default

user: system:admin/master-example-com:8443

name: default/master-example-com:8443/system:admin

current-context: default/master-example-com:8443/system:admin

kind: Config

preferences: {}

users:

- name: system:admin/master-example-com:8443

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURKRENDQWd5Z0F3SUJBZ0lCQmpBTkJna3Foa2lHOXcwQkFRc0ZBREFtTVNRd0lnWURWUVFEREJ0dmNHVnUKYzJocFpuUXRjMmxuYm1WeVFERTFNemM1TkRFNU16UXdIaGNOTVRnd09USTJNRFl3TlRNMldoY05NakF3T1RJMQpNRFl3TlRNM1dqQk9NVFV3SEFZRFZRUUtFeFZ6ZVhOMFpXMDZZMngxYzNSbGNpMWhaRzFwYm5Nd0ZRWURWUVFLCkV3NXplWE4wWlcwNmJXRnpkR1Z5Y3pFVk1CTUdBMVVFQXhNTWMzbHpkR1Z0T21Ga2JXbHVNSUlCSWpBTkJna3EKaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF6T0oxVEtzWCtHRDZ0K050TFV0SmUrNHlpLzcwaFRMSwpUZG9nckpma2lWemZLYmc2NDVyM3V4UzhZRk8yZVYyMGhCeklEZS9scjE1QmRKaGwvT1pyYTN0K1hXRlpCTWk2CjlBcVdMYnhJZ2YyMjJKYm01bkhKdnRGNEo1ek1LNWtxbUlYSVZOd2UyU05pK2JRR3FDeVJSVytTTkc4MHl6eWIKVXY3UlhaSXRMQklnVXRiSkFoZ1FYYzJWZGtFOTVEZXRnQUovWXJEUTdHTU5LWkdzcSt1N0FLMGFFaDhGSzlIMwpvVEtOb2p6aDdGQlFYelpLdE9HbzU5cGlLRHd3L0pOeTJaSmlzQlZDNGh2akZYTEpiSEN4T3B1Q3cvYUpha1VmCnY3enZXd0d6dlFWTmEvSU1NcDZzWVI3V3BzaEw2alVxZE1IaFFWY1hlSk1qeU1RR3M5Y2dNd0lEQVFBQm96VXcKTXpBT0JnTlZIUThCQWY4RUJBTUNCYUF3RXdZRFZSMGxCQXd3Q2dZSUt3WUJCUVVIQXdJd0RBWURWUjBUQVFILwpCQUl3QURBTkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQVA0aUw5U1Q5V1c5OXFQcWswQ0pBR0NoZk5JdUZNUkVtCnZDT3E0RlIrZXpaNlBHNjlZRnJpNEM1RkgzTktqZXhPdGpJQysySDF6dkoxenI2ZnJ2Y3lXbDZCdzhEdzY0Z2IKWlFsMWdramVER3NUNmZ5VHZoeWpDWW9YUUphSFIvVjBnY1FSbHU3VjE0bzR4OFoxRjdQc3UyQUVlV0szakVnbgpQM05rd0MvaGxyVlVXaG1jNDFmejRweDhaSys5eFFOQ3VqUzhBVEpBRUFQSnBUYVQyZE9kTm12VkpHNmM1RGN6CmUyc3pidTN3bmNhMEIxeXNzUzZkVEFZTTllSWhHUjJ2VWhvU0gyRVo0cjc2RDFENXU5blF5Qm00VzgzbGEveHYKU1czazI3bHVYalVDK2JQRlllV0hWam1QYjJwemJSZTRHWlZEOVBINGhLajVtZGJCejJlQWd3PT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBek9KMVRLc1grR0Q2dCtOdExVdEplKzR5aS83MGhUTEtUZG9nckpma2lWemZLYmc2CjQ1cjN1eFM4WUZPMmVWMjBoQnpJRGUvbHIxNUJkSmhsL09acmEzdCtYV0ZaQk1pNjlBcVdMYnhJZ2YyMjJKYm0KNW5ISnZ0RjRKNXpNSzVrcW1JWElWTndlMlNOaStiUUdxQ3lSUlcrU05HODB5enliVXY3UlhaSXRMQklnVXRiSgpBaGdRWGMyVmRrRTk1RGV0Z0FKL1lyRFE3R01OS1pHc3ErdTdBSzBhRWg4Rks5SDNvVEtOb2p6aDdGQlFYelpLCnRPR281OXBpS0R3dy9KTnkyWkppc0JWQzRodmpGWExKYkhDeE9wdUN3L2FKYWtVZnY3enZXd0d6dlFWTmEvSU0KTXA2c1lSN1dwc2hMNmpVcWRNSGhRVmNYZUpNanlNUUdzOWNnTXdJREFRQUJBb0lCQUVWd3ExamsxQ2IybDRNagpyYWtnVHpPVnM4UUhFVkRqdWZWUTdLb1NnUDZkWDNXQVgxVXMvTEdIZ2FFVVBsQThGaWFBcXIwdWhhWStSK2tBCmpmQjlHQU5Cdzc1YWRCVlBBeTRiT0hNZjBXTmRYazlpTmJmODhPZWZqeDI5NHVVVDhIL3BOOUNyR2psMTZPSEEKeGxEUmFoc2lpV2NFR2R5WUdmeXpLTHFTQklWZXM1cHNOQllwMjVvM2hmWDFCdjRjaDZwb2NoN3dTOGVQbGdFYgpoRFJMY3BtZnlXUmVSNHI3a3pCazNSUGQ2QWxRK1hhcVo5NU1ZKzcwc3NCSmRyWi9NbG1mQWZocm1nTmRDQWxBCnBMMERGYU5meHZzRjl0UlZnMWxoTnFrelhGUmdOY0g5d0RnUU9yK3pUQVBqaUxWWUMrZ0lIa0Z2VU9vaTM5WUsKYm9oVGdvRUNnWUVBK0k0MkwxUVB0QVN3Nk1MTWNTN0cxeVN4YThlR1N0NHpVUWE2b2xReUc0RFVlUUw4ZGJGLwpCb2F4L203REJ5cHlHMks1UGVwMmZaejhNbDl4OWNKaXZkNFk1dG9YZkR6S2ZRQ3NQSElna2pWeGVMay8zRDVWCkJSM1ZhVWVhcWFDdXptbmFHTUg1TW9rdHhBUVgwMWRlSDR3V0pTampaUGhVd2Vaamk3Y1lia01DZ1lFQTB3Vm0KMmtsVFFUMWVnVTE5OW55QzQzWXhRcnFJZmlDdUxDZHV2bzN4c2VHbFBOVlQ3NVdVZVFLZkErdkJGUDM0NmVROQpvYkNCdTZTUzFtM0lvUnlHY2dpd29CL0gvbkN1Y0JyYVpib0EreUFHWURnRktsc2tQNGF5dk1VbFBFV1huTTl2CkdsUWF4V2Q2TGxvSi9oNWhNWXB3ajM5c2hrWHRseDdoc2d2amYxRUNnWUI5ZkZiUTJEREJZdWpwNm9jSzBXSGoKOW90NGJaaFlMZ3hjYVBoS3doVTJHM21weXA4bzBEN2dUWnFKYU9RZnR3YzYya0hKaDVqZzNDUGJUcUtiUDlOWQpKa3dPS0tkWXV0eEQ5ZFgvQW1OOVRXd0hWZ2R1cXkyRFVzZU95bTdFR3ZLR0ZaemRpUGpGMGNvQVAwekVEMFRlCnludlhzT2YwN3diamlleFIrbE5rUHdLQmdIMzlscWd6NldKbFdyeUc0UE0rNmdNVytWaG0rTUdkajRCTFZ1S3MKNnlhU204NlRiQmI2enZmbEtiMzBqNGFTRUlETlJrTDRtS3pKR09hd0MzNnVBbE9wZnBOTUJtT1RNWU03ZFRRUgpkeTU0czNYVlhMZ3FUSjBsTmloZzZOZHdrWklOZzc5TGdlUms0TjAxNHd0M1pmNW5Nc2RxaEFnelRpVFJTbDI4Cm5XclJBb0dBTlZhY0QyOW5wVHRmRjVyeDJKMGJmd1oySkZwWmVVNkk4NDJyVXZaTElnZkZOdjhONEpqS1l5SWQKZjkyaWFtUmNFRlZMZXZsdUlqek1HVjI2Q2dPZ2h4QkxYRURydHNqUmNrYldWejVhcnI0V0RWV0F1YWZlSmtNcwpKN3c2TzNNT0YwS2EvWGVRSG9nS09SRzVtampnaE1TQmtDSlRXd0hmc21wVlBad1dIWGc9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

[root@master ~]# oc get

You must specify the type of resource to get. Valid resource types include:

* all

* buildconfigs (aka 'bc')

* builds

* certificatesigningrequests (aka 'csr')

* clusterrolebindings

* clusterroles

* componentstatuses (aka 'cs')

* configmaps (aka 'cm')

* controllerrevisions

* cronjobs

* customresourcedefinition (aka 'crd')

* daemonsets (aka 'ds')

* deployments (aka 'deploy')

* deploymentconfigs (aka 'dc')

* endpoints (aka 'ep')

* events (aka 'ev')

* horizontalpodautoscalers (aka 'hpa')

* imagestreamimages (aka 'isimage')

* imagestreams (aka 'is')

* imagestreamtags (aka 'istag')

* ingresses (aka 'ing')

* groups

* jobs

* limitranges (aka 'limits')

* namespaces (aka 'ns')

* networkpolicies (aka 'netpol')

* nodes (aka 'no')

* persistentvolumeclaims (aka 'pvc')

* persistentvolumes (aka 'pv')

* poddisruptionbudgets (aka 'pdb')

* podpreset

* pods (aka 'po')

* podsecuritypolicies (aka 'psp')

* podtemplates

* projects

* replicasets (aka 'rs')

* replicationcontrollers (aka 'rc')

* resourcequotas (aka 'quota')

* rolebindings

* roles

* routes

* secrets

* serviceaccounts (aka 'sa')

* services (aka 'svc')

* statefulsets (aka 'sts')

* storageclasses (aka 'sc')

* users

error: Required resource not specified.

Use "oc explain <resource>" for a detailed description of that resource (e.g. oc explain pods).

See 'oc get -h' for help and examples.

[root@master ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 38G 4.7G 34G 13% /

devtmpfs devtmpfs 1.4G 0 1.4G 0% /dev

tmpfs tmpfs 1.4G 0 1.4G 0% /dev/shm

tmpfs tmpfs 1.4G 2.5M 1.4G 1% /run

tmpfs tmpfs 1.4G 0 1.4G 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 165M 850M 17% /boot

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/eb7b373ef430c8b1baeeeeffc02f0e693b6fa48748b9b1552b1fa6b839f8eaaf/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/9d5303e4886a144108c72a00c6eee75d14590ff839369efadcdafd62e1fd43a2/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/7a53fad28e9c987b45a5f512760a359af2c2ad2dff3c65580f979c398f7ab934/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/fed7e3a4d541325cef439725c44c816dd6c146f8a16cd4079acac31db044c938/shm

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/b2229aa2e10d8c2c73f75a47885dea5356b6f165a7a5394b99f05b73a14a7f0a/shm

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/b8e6c4182a87130391b427c6c7c0fa3e5c2c36c63b96374d03ddaa0f4a3ed76e/shm

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/5bd46881dacf0f25afbc9764c48d1aa1ddb2465e08fbacb4fafe9631a5436849/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/0b9a7e5fe4d28f5350eb409dcadc125888866da979ad6b447f47c915bc96bd46/shm

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/57b635668084421ac151053f1170e09d855a65ed860215a70c9d4a596fa92c84/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/bd82db30fce74359ff5630a54b404a29917c2ce3b018990d2d4c623e4c92f013/shm

tmpfs tmpfs 1.4G 4.0K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/54cd296a-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/service-catalog-ssl

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/fd642fe8-c154-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/router-token-vbdlh

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/20f9d368-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/webconsole-token-q5nrr

tmpfs tmpfs 1.4G 8.0K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/50df6133-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/apiserver-ssl

tmpfs tmpfs 1.4G 8.0K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/20f9d368-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/serving-cert

tmpfs tmpfs 1.4G 12K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/fd642fe8-c154-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/server-certificate

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/50df6133-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/service-catalog-apiserver-token-mhnsx

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/54cd296a-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/service-catalog-controller-token-xpvll

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/07eefb7c-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/registry-token-f6kph

tmpfs tmpfs 1.4G 8.0K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/80e694bb-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/serving-cert

tmpfs tmpfs 1.4G 8.0K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/07eefb7c-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/registry-certificates

tmpfs tmpfs 1.4G 16K 1.4G 1% /var/lib/origin/openshift.local.volumes/pods/80e694bb-c155-11e8-8a19-000c29a4327b/volumes/kubernetes.io~secret/apiserver-token-2smvw

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/ad8d1b9db8bc0516043ad76547e3b3b2f72d9a6858f72fa426b55bed67577b5d/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/a6c8c089f8bff4697f58a5964f774357b7184f21fef8df6d9f45ff013af0a043/shm

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/e2e25b4a97f1b94f66de6f315d69f126164afc23096f5d4483dd5ae647784bf8/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/9ea77355a758fb56db75f2eb28651e4325e2b2133decfe1f6e5efe3c771b7f6c/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/44fde121c03dc6e281891b5c97a428bfaab976955de3de1ee8b551fc98895a95/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/abe784d3d9b1207f9b5b5d470434072b725dc3a679475e5d26f0ebb80867026e/shm

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/6e05b738ba6a54138e7c8b9d3920166fc509c88585029a49ed3d21a5510de719/shm

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/f59889735a6ae9d17de0f3a56cff910d9ff73c57e4d9d009ed91856a1282a2cd/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/98a9f480752761ac017081a0ac0ed4e242c469729b3d8444e7acbd2260636e41/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/33a708c18e140aa0802924dae11af918d5811d039c32b28565f386f160aee312/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/2898f8be9a1107a5046b9a97150dc3a66ca5413b581cbe303e966f4850c16d5f/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/bea3e09568fa1c5f5582a99c33a6dc445797164334ce561da486fafc9cc09971/merged

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/a48adc823db2b5623290df233ce1653cf1c4d50b4d88dc7ed79ffba078a5837d/shm

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/a701b56dac0bb93f8b905267c2b42f4c9764f342712a065d2a9b1c3b16adce6f/shm

shm tmpfs 64M 0 64M 0% /var/lib/docker/containers/83ae343567fa5049cd9024e83bdc7f6d151c433b029bb5031341f4467b7aee21/shm

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/97393d83f06eb6da0e8585a37bfb837dbdc8385b0dee09d2c001473c4a956f0d/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/e6ca6ca185b9bfcd4b9459c8cff61dcc52568114b8649d82451ff91de7d24032/merged

overlay overlay 38G 4.7G 34G 13% /var/lib/docker/overlay2/1cd9340f75a09f9579cb540240eca1724dc48e7d803869c3a0b40a788fe04582/merged

tmpfs tmpfs 279M 0 279M 0% /run/user/0
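Most of this listing is per-container overlay mounts, 64M shm mounts and per-pod secret volumes (the tmpfs entries under /var/lib/origin/openshift.local.volumes), and it grows with every pod scheduled on the host. To see only the real disks, a small filter over df -hT output is enough. This is a sketch: real_disks is a hypothetical name, and the excluded types (overlay, tmpfs, devtmpfs) are simply the ones that appear in the output above.

```shell
#!/bin/sh
# real_disks (hypothetical name): read `df -hT` output on stdin and keep the
# header plus every row whose Type column (field 2) is not a container or
# pod pseudo-filesystem. The "shm" rows have type tmpfs, so they are dropped too.
real_disks() {
  awk 'NR == 1 || ($2 != "overlay" && $2 != "tmpfs" && $2 != "devtmpfs")'
}
```

Running df -hT | real_disks against the listing above leaves only /dev/mapper/centos-root and /dev/sda1. GNU df can do the same filtering directly with df -hT -x overlay -x tmpfs -x devtmpfs.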

[root@node1 ~]# netstat -tlpn |grep openshift

tcp 0 0 127.0.0.1:53 0.0.0.0:* LISTEN 4389/openshift

tcp6 0 0 :::10250 :::* LISTEN 4389/openshift

tcp6 0 0 :::10256 :::* LISTEN 4389/openshift
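On a node, the openshift process listens on 127.0.0.1:53 for cluster DNS, on 10250 for the kubelet API and on 10256 for the kube-proxy health check. A quick way to confirm all three after a reboot is to check the listening ports in netstat -tlpn output. The sketch below assumes the column layout shown above (local address in field 4, state in field 6); check_ports is a hypothetical name.

```shell
#!/bin/sh
# check_ports (hypothetical name): read `netstat -tlpn` output on stdin and
# report whether each port given as an argument is in LISTEN state.
# Returns 0 only if every requested port is listening.
check_ports() {
  # Field 4 is the local address (e.g. 127.0.0.1:53 or :::10250);
  # the text after the last colon is the port.
  listening=$(awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' | sort -u)
  missing=0
  for port in "$@"; do
    if printf '%s\n' "$listening" | grep -qx "$port"; then
      echo "ok: $port"
    else
      echo "missing: $port"
      missing=1
    fi
  done
  return "$missing"
}
```

Because dnsmasq also listens on port 53, narrow the input to the openshift process first: netstat -tlpn | grep openshift | check_ports 53 10250 10256.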

[root@node1 ~]# netstat -tlpn |grep dns

tcp 0 0 10.129.0.1:53 0.0.0.0:* LISTEN 1125/dnsmasq

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 1125/dnsmasq

tcp 0 0 192.168.109.11:53 0.0.0.0:* LISTEN 1125/dnsmasq

tcp6 0 0 fe80::a822:7dff:fe26:53 :::* LISTEN 1125/dnsmasq

tcp6 0 0 fe80::60d8:88ff:fe83:53 :::* LISTEN 1125/dnsmasq

tcp6 0 0 fe80::4880:31ff:feb3:53 :::* LISTEN 1125/dnsmasq

tcp6 0 0 fe80::e06a:a91:5699::53 :::* LISTEN 1125/dnsmasq

[root@node1 ~]# systemctl status origin-node

● origin-node.service

Loaded: loaded (/etc/systemd/system/origin-node.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-09-26 12:37:26 IST; 18min ago

Process: 4267 ExecStartPost=/usr/bin/sleep 10 (code=exited, status=0/SUCCESS)

Process: 4264 ExecStartPre=/usr/bin/dbus-send --system --dest=uk.org.thekelleys.dnsmasq /uk/org/thekelleys/dnsmasq uk.org.thekelleys.SetDomainServers array:string:/in-addr.arpa/127.0.0.1,/cluster.local/127.0.0.1 (code=exited, status=0/SUCCESS)
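The ExecStartPre line shows how the node wires its DNS together: before origin-node starts, dbus-send calls dnsmasq's SetDomainServers method so that queries for in-addr.arpa and cluster.local are forwarded to 127.0.0.1, where the openshift process listens on port 53, while dnsmasq itself answers on the node's own addresses. The small decoder below just spells out that argument; decode_domain_servers is a hypothetical helper that assumes the /domain/server,/domain/server form seen above.

```shell
#!/bin/sh
# decode_domain_servers (hypothetical name): turn a dbus-send argument such as
#   array:string:/in-addr.arpa/127.0.0.1,/cluster.local/127.0.0.1
# into one "domain -> upstream server" line per entry.
decode_domain_servers() {
  # Drop the dbus type prefix, put each entry on its own line,
  # then split "/domain/server" on "/".
  printf '%s\n' "${1#array:string:}" | tr ',' '\n' |
    awk -F/ 'NF >= 3 { print $2 " -> " $3 }'
}
```

Called with the argument from the status output, it prints in-addr.arpa -> 127.0.0.1 and cluster.local -> 127.0.0.1. To check the forwarding end to end, a query such as dig @192.168.109.11 kubernetes.default.svc.cluster.local from a host with bind-utils installed should return the cluster IP of the kubernetes service.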

[root@node1 ~]# docker --version

Docker version 1.13.1, build 6e3bb8e/1.13.1
